OSiRIS is a pilot project funded by the NSF to evaluate a software-defined storage infrastructure for our primary Michigan research universities. OSiRIS will combine a number of innovative concepts to provide a distributed, multi-institutional storage infrastructure that will allow researchers at any of our three campuses to read, write, manage and share their data directly from their computing facility locations.

Our goal is to provide transparent, high-performance access to the same storage infrastructure from well-connected locations on any of our campuses. We intend to enable this through a combination of network discovery, monitoring and management tools and through the creative use of Ceph features.

By providing a single data infrastructure that supports computational access to the data “in place”, we can meet many of the data-intensive and collaboration challenges faced by our research communities and enable these communities to easily undertake research collaborations beyond the borders of their own universities.

Aug 25, 2021 - OSiRIS Project Transitioning to Best-Effort Mode After August 31, 2021

After a successful six-year prototyping and operations period, the OSiRIS project is transitioning to a best-effort mode for at least the next year. We have provided multiple petabytes of distributed, performant storage for scientists across our three main universities and beyond. As we make this transition, we want to thank all the collaborators and science users who participated in the OSiRIS project. The project team continues to explore possible follow-on projects that could build upon what we have created with OSiRIS. If new opportunities arise, we will share details on the osiris-announce@umich.edu mailing list.

Apr 8, 2021 - Use of OSiRIS by SEAS Master's Students

In the winter 2021 term at the University of Michigan, four students in the School for Environment and Sustainability (SEAS) used OSiRIS for their Master’s project, which is offered as SEAS course 699.

Sep 16, 2020 - MSU Awarded NSF Grant for Science DMZ

From addressing climate change to developing drug treatments, data is key to finding solutions to many global problems. Thanks to a National Science Foundation grant of nearly half a million dollars, MSU researchers will soon be able to easily share huge volumes of data with peers at institutions around the world, through the creation of a Science DMZ. A demilitarized zone, or DMZ as it is known in cyberinfrastructure, is a portion of the network optimized for high-performance research applications. MSU IT will fund construction and maintenance costs exceeding the NSF award amount and serve as co-principal investigators on the grant with the Institute for Cyber-Enabled Research, or ICER. You can read more about the grant here.

Jul 17, 2020 - Ceph Upgrade from Nautilus to Octopus

The OSiRIS team upgraded the Ceph cluster from Nautilus 14.2.9 to Octopus 15.2.4. This is the latest release of Ceph as of August 2020 and the fourth release in the Ceph Octopus stable release series.

Major Changes from Nautilus

- A new deployment tool called cephadm has been introduced that integrates Ceph daemon deployment and management via containers into the orchestration layer. For more information, see Cephadm.

- Health alerts can now be muted, either temporarily or permanently.

- Health alerts are now raised for recent Ceph daemon crashes.
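As an illustration of the health alert changes listed above, here is a minimal Python sketch that assembles the relevant `ceph` command lines without running them. The helper names (`mute_command`, `unmute_command`, `crash_review_commands`) and the `OSD_DOWN` alert code are hypothetical choices for this example, not part of the OSiRIS tooling. The subcommands themselves (`ceph health mute`, `ceph health unmute`, `ceph crash ls-new`, `ceph crash archive-all`) are the ones documented for Ceph Octopus.

```python
def mute_command(code, ttl=None, sticky=False):
    """Build argv for `ceph health mute <code> [<ttl>] [--sticky]`.

    With a ttl (for example "1h") the mute expires on its own.
    Without a ttl the mute has no time limit.
    With sticky=True the mute is kept even if the alert clears and returns.
    """
    argv = ["ceph", "health", "mute", code]
    if ttl:
        argv.append(ttl)
    if sticky:
        argv.append("--sticky")
    return argv


def unmute_command(code):
    """Build argv for `ceph health unmute <code>`."""
    return ["ceph", "health", "unmute", code]


def crash_review_commands():
    """Commands to list new crash reports and then archive them.

    Archiving the reports is what clears the recent-crash health alert.
    """
    return [
        ["ceph", "crash", "ls-new"],
        ["ceph", "crash", "archive-all"],
    ]


if __name__ == "__main__":
    # Print the command lines instead of executing them, so the sketch
    # can be read and tried on a machine without a Ceph cluster.
    examples = [
        mute_command("OSD_DOWN", ttl="1h"),      # temporary mute
        mute_command("OSD_DOWN", sticky=True),   # mute without a time limit
        unmute_command("OSD_DOWN"),
    ] + crash_review_commands()
    for argv in examples:
        print(" ".join(argv))
```

On a cluster admin node, each list could be passed to `subprocess.run(argv, check=True)` in place of the `print` call.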

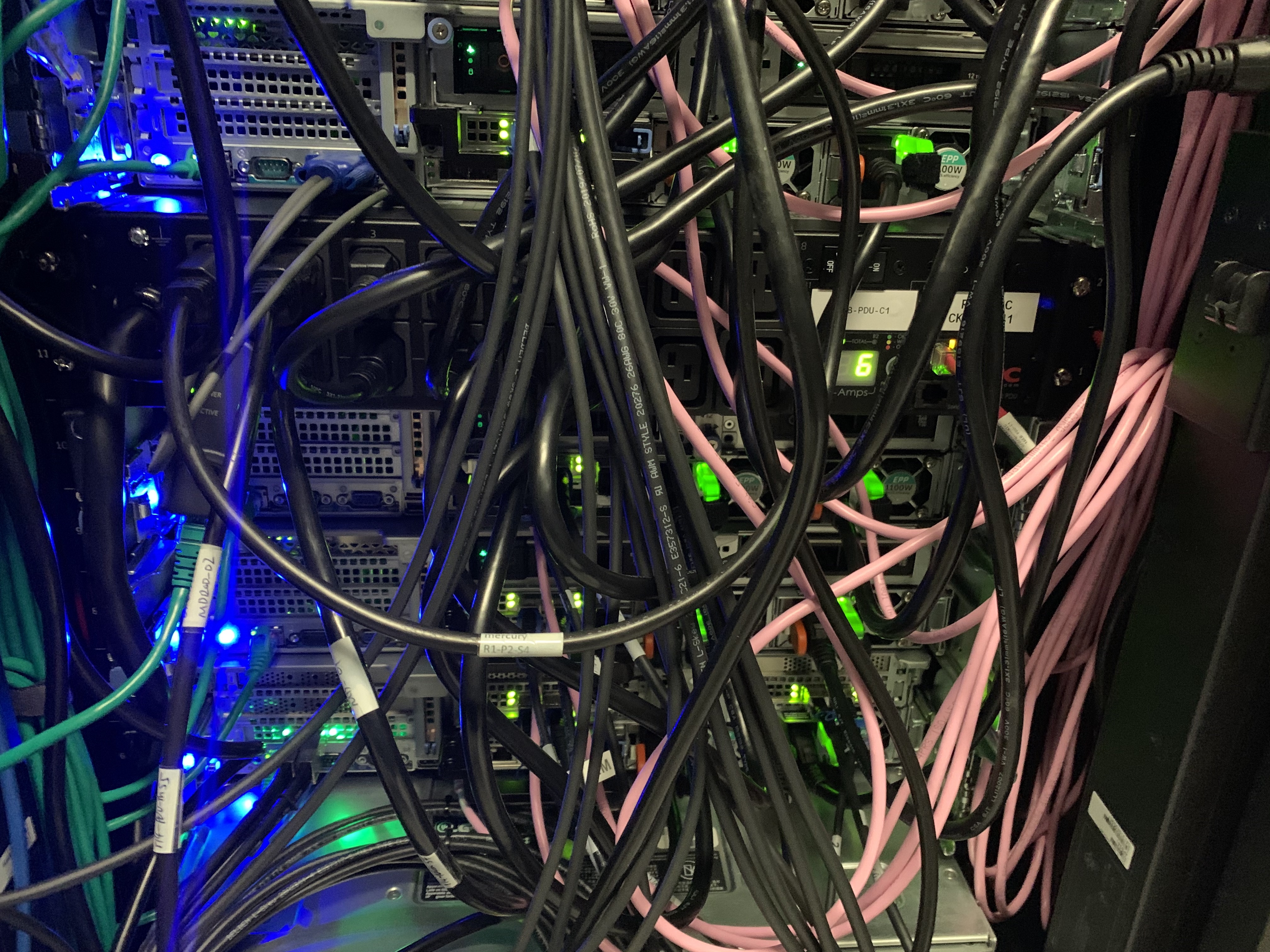

Feb 28, 2020 - New PDU Installed at University of Michigan

OSiRIS installed a third Power Distribution Unit (PDU) in rack 16EB to balance the load after the installation of 11 new servers at UM last year.