OSiRIS At Supercomputing 2019

The International Conference for High Performance Computing, Networking, Storage, and Analysis: November 17–22, Colorado Convention Center, Denver, CO

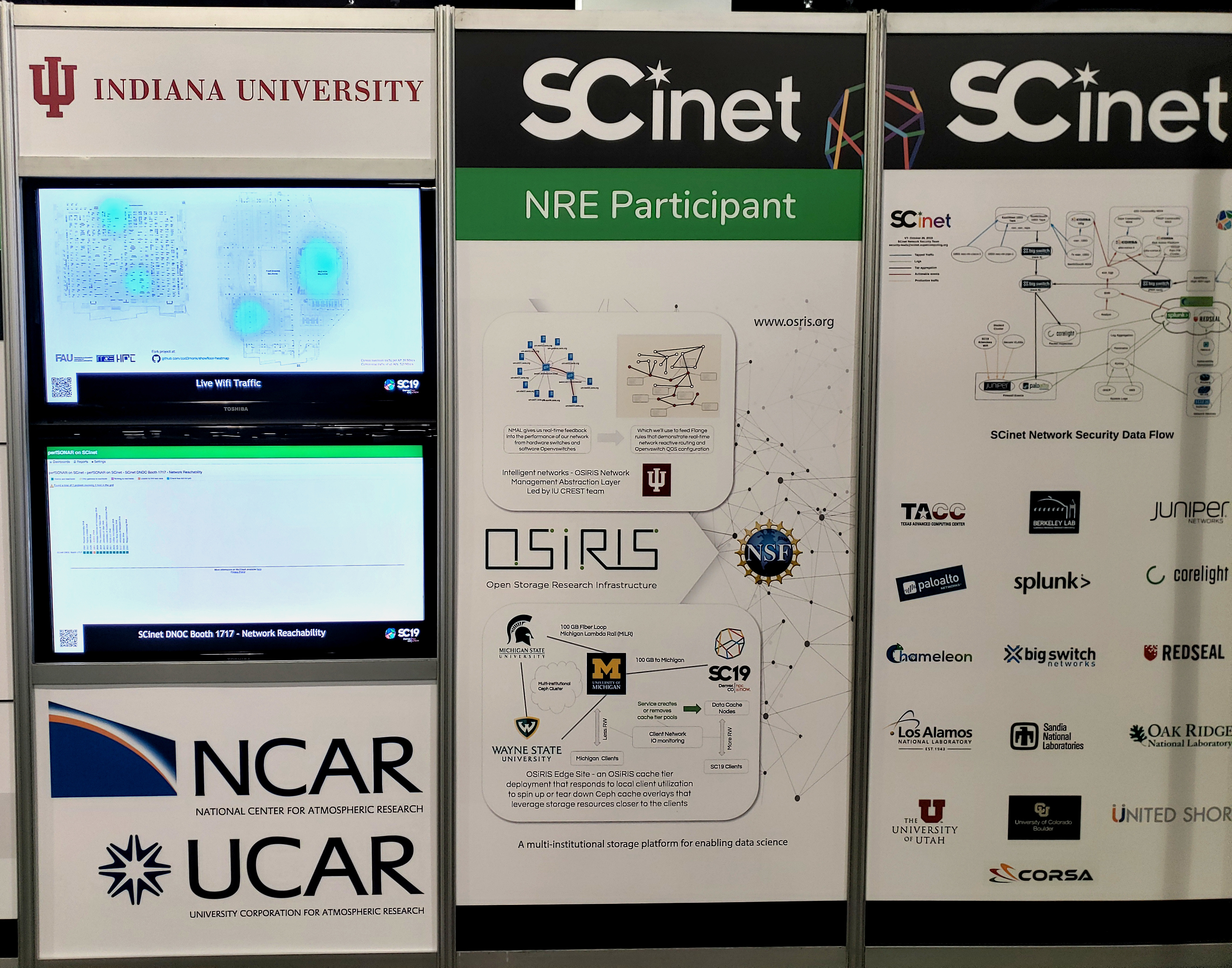

Members of the OSiRIS team traveled to SC19 to deploy a pod of equipment in the booth for OSiRIS and SLATE demos. We gained valuable experience and data on Ceph cache tiering, as well as experience with a new ONIE-based switch running the SONiC OS.

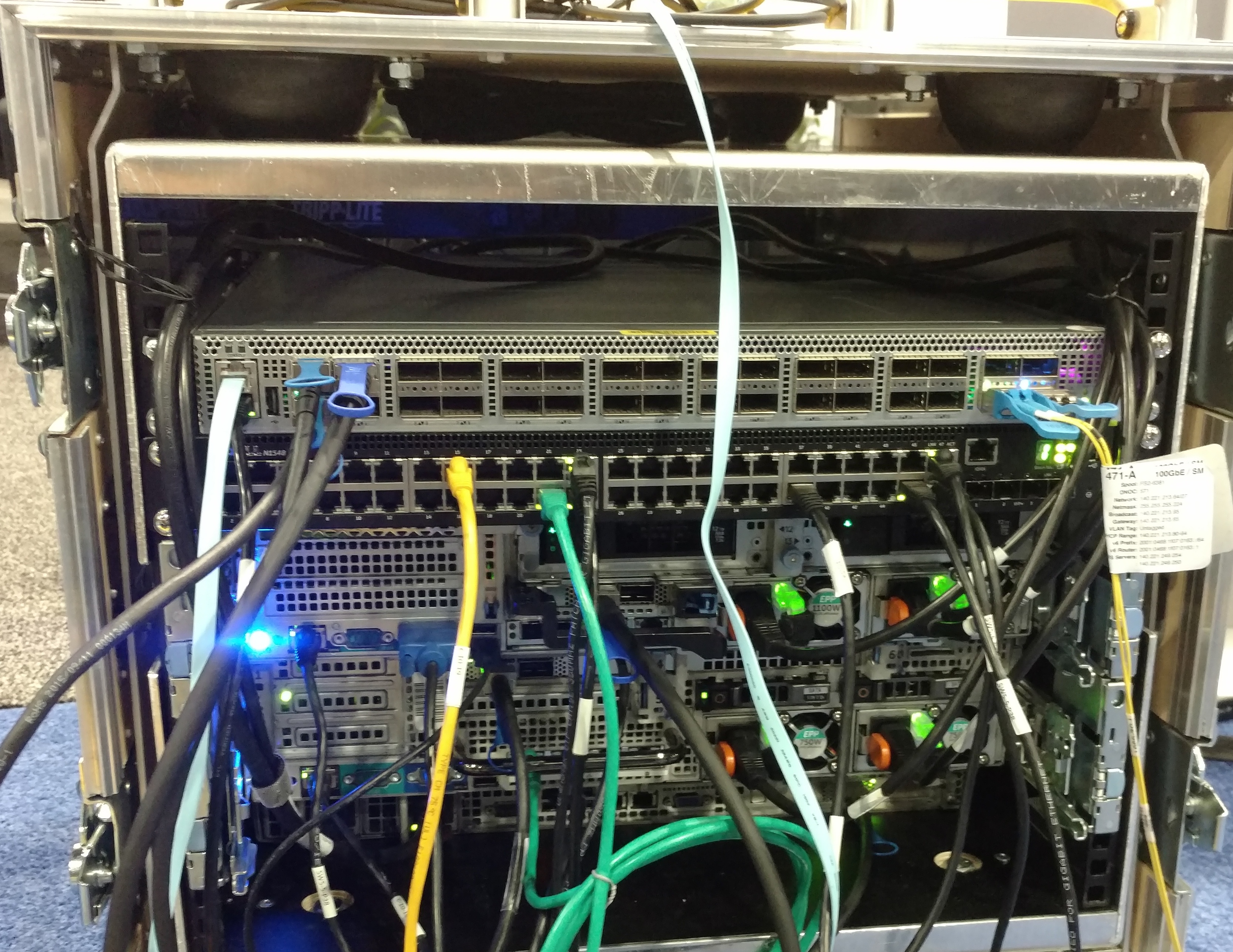

SCInet provides all conference networking. Somewhere in here is our OSiRIS 100Gb fiber path back to Michigan.

OSiRIS SC19 Storage and Network Demos

Our demonstration this year focused on stress-testing Ceph cache tiering for use in responsive, dynamic deployments. We know from previous years that a cache tier can be used to provide I/O boosts to geographically local clients. We also know that it becomes a disadvantage for any clients with high latency to the cache. What if we could create a more responsive system for managing caches, one that spins them up on demand and removes them once demand has moved to a different location? That was our goal this year: to find out how our Ceph cluster responds to rapidly deploying, filling, draining, and removing cache tiers at SC19.

We also planned to showcase SDN-based quality-of-service (QoS) techniques using Open vSwitch, network monitoring, and declarative, programmatic control of the network using a domain-specific language called Flange, integrated by our NMAL team at IU. The combined tools were to manage the Ceph traffic between our local SC OSiRIS deployment and the WAN links to our core deployment in Michigan. Unfortunately, the late arrival of our equipment and difficulties with our switch platform prevented us from collecting significant data in this area.

Ceph Cache Tier Rapid Deployment Tests

OSiRIS already uses Ceph cache tiering for users at the Van Andel Institute in Grand Rapids. It works well for them, but for users at other sites who might want to access the same data, the tier location is a disadvantage. Either the data must be moved to another pool without a cache, or the cache must be drained and removed so that other users can access the data more rapidly from storage at our three core sites. What we envision is a service that responds to client demand and manages cache pools as needed. Lots of usage at a site with available storage for deploying a cache tier? Spin up a cache. Demand changes, with more usage elsewhere? Drain the cache and spin one up where demand is highest.
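The placement decision described above could be expressed as a small policy function. The Python below is a minimal sketch under stated assumptions, not the OSiRIS implementation: the site names, the per-site demand figures (for example, recent client I/O rates), and the `min_share` and `hysteresis` thresholds are all hypothetical and chosen only for illustration.

```python
from typing import Dict, Optional, Set


def choose_cache_site(
    demand: Dict[str, float],
    cache_capable: Set[str],
    current: Optional[str] = None,
    min_share: float = 0.5,
    hysteresis: float = 0.1,
) -> Optional[str]:
    """Return the site that should host the cache tier, or None for no cache.

    demand:        hypothetical per-site client load (e.g. recent I/O rate)
    cache_capable: sites with spare fast storage for a cache pool
    current:       site currently hosting the cache, if any
    """
    total = sum(demand.values())
    if total <= 0:
        return None  # no demand anywhere, so no cache is needed

    # Fraction of total demand coming from each site that could host a cache.
    share = {site: load / total for site, load in demand.items() if site in cache_capable}
    if not share:
        return None

    best = max(share, key=share.get)

    # Hysteresis: keep the existing cache unless another site leads it by a
    # clear margin, so small fluctuations do not trigger a drain and rebuild.
    if current in share and share[best] - share[current] < hysteresis:
        best = current

    # Only deploy a cache where one site accounts for a large share of demand.
    return best if share[best] >= min_share else None
```

For example, `choose_cache_site({"vai": 10, "um": 70, "msu": 20}, {"vai", "um"}, current="vai")` returns `"um"`, which in this sketch would mean draining the existing cache and creating one near U-M clients. The hysteresis margin is a design choice: draining and rebuilding a cache is expensive, so the policy avoids moving it for marginal gains.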

So how will Ceph respond to using this feature the way we envision? Typical cache deployments are static and left unchanged once configured, which is how we have used cache tiering so far in production and testing. Our testing at SC19 confirmed that a more dynamic scenario is possible.

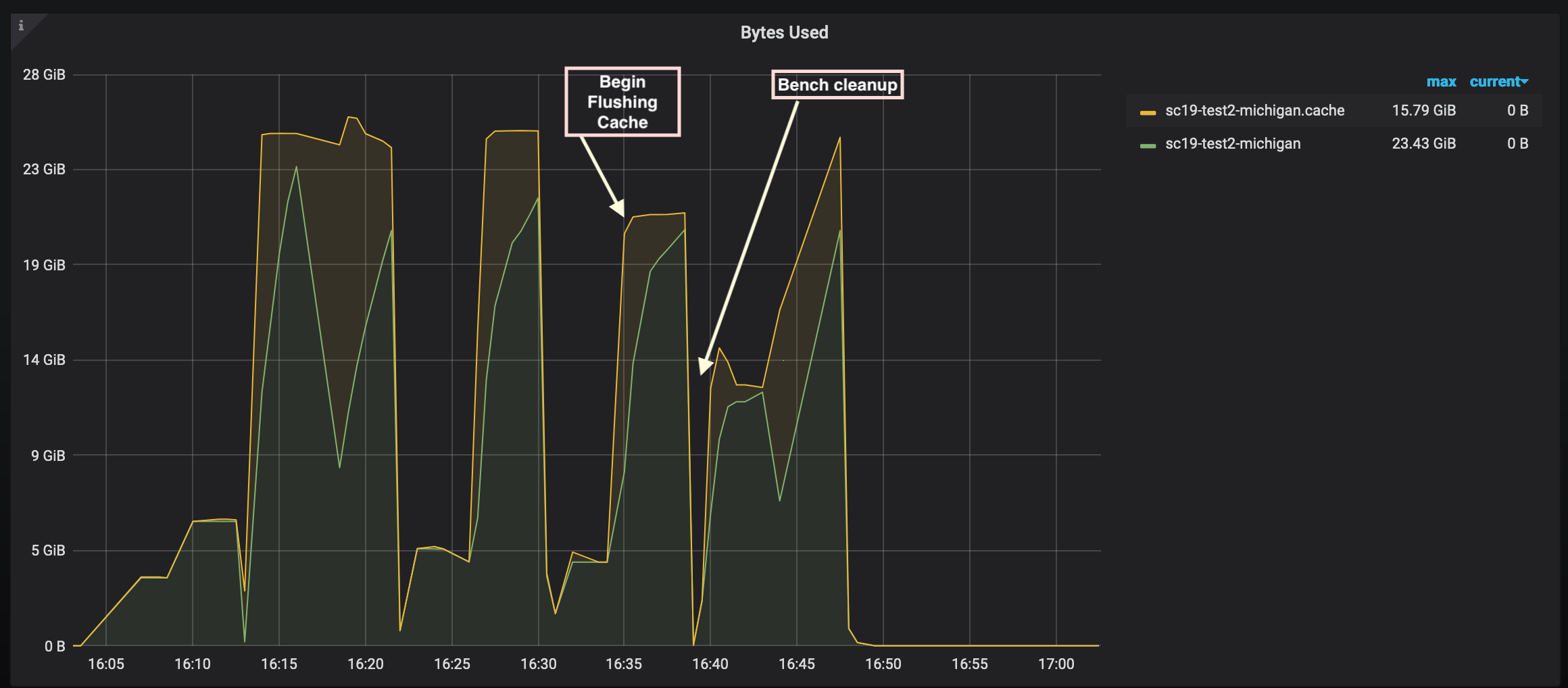

We used a simple script to create a cache tier on NVMe storage at SC19 while simultaneously running rados read/write benchmarks on several clients. The results were promising! Ceph chugged along happily through at least 10 rounds of creating, filling, and draining the tier. We didn’t see any signs of errors or problems. The only notable issue was that the ‘cache-flush-evict-all’ command sometimes did not completely flush the cache; the subsequent overlay removal commands would then fail because the cache was not empty. Repeating the evict command until the cache is truly empty resolves the issue.
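The script itself isn’t shown in this post. As a rough illustration only, a create/drain cycle built from the standard Ceph cache tiering commands might look like the Python sketch below. The function names, the `pg_num` default, the use of `proxy` mode while draining (following the Ceph documentation for removing a writeback cache), and the retry limit are our assumptions; the retry loop around `cache-flush-evict-all` mirrors the workaround described above. The command runner is injectable so the logic can be exercised without a live cluster.

```python
import subprocess
from typing import Callable, List

# A runner takes a command as an argument list and returns its stdout.
Runner = Callable[[List[str]], str]


def run_cmd(args: List[str]) -> str:
    """Run a CLI command, raising CalledProcessError on a non-zero exit."""
    return subprocess.run(args, check=True, capture_output=True, text=True).stdout


def create_cache_tier(base: str, cache: str, run: Runner = run_cmd, pg_num: int = 64) -> None:
    """Create a writeback cache pool and overlay it on the base pool."""
    run(["ceph", "osd", "pool", "create", cache, str(pg_num)])
    run(["ceph", "osd", "tier", "add", base, cache])
    run(["ceph", "osd", "tier", "cache-mode", cache, "writeback"])
    run(["ceph", "osd", "tier", "set-overlay", base, cache])
    run(["ceph", "osd", "pool", "set", cache, "hit_set_type", "bloom"])


def drain_cache_tier(base: str, cache: str, run: Runner = run_cmd, max_attempts: int = 10) -> int:
    """Flush and evict the cache, then detach it. Returns the evict passes used."""
    # Proxy mode sends new and modified objects to the backing pool.
    run(["ceph", "osd", "tier", "cache-mode", cache, "proxy"])
    for attempt in range(1, max_attempts + 1):
        run(["rados", "-p", cache, "cache-flush-evict-all"])
        # A single pass sometimes leaves objects behind; only proceed once
        # 'rados ls' reports that the cache pool is empty.
        if not run(["rados", "-p", cache, "ls"]).strip():
            break
    else:
        raise RuntimeError(f"cache pool {cache} still not empty after {max_attempts} passes")
    # Removing the overlay fails if the cache still holds objects.
    run(["ceph", "osd", "tier", "remove-overlay", base])
    run(["ceph", "osd", "tier", "remove", base, cache])
    return attempt
```

With the pool names from our test, a cycle would be `create_cache_tier("sc19-test2-michigan", "sc19-test2-michigan.cache")`, a benchmark run, then `drain_cache_tier` with the same arguments. A real deployment would also set cache sizing parameters such as `target_max_bytes`, and this sketch leaves the emptied cache pool in place rather than deleting it.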

Below you can see the interaction between the cache pool sc19-test2-michigan.cache and the backing pool sc19-test2-michigan as we repeatedly fill and flush the cache. Between benchmarks the data was cleaned up.

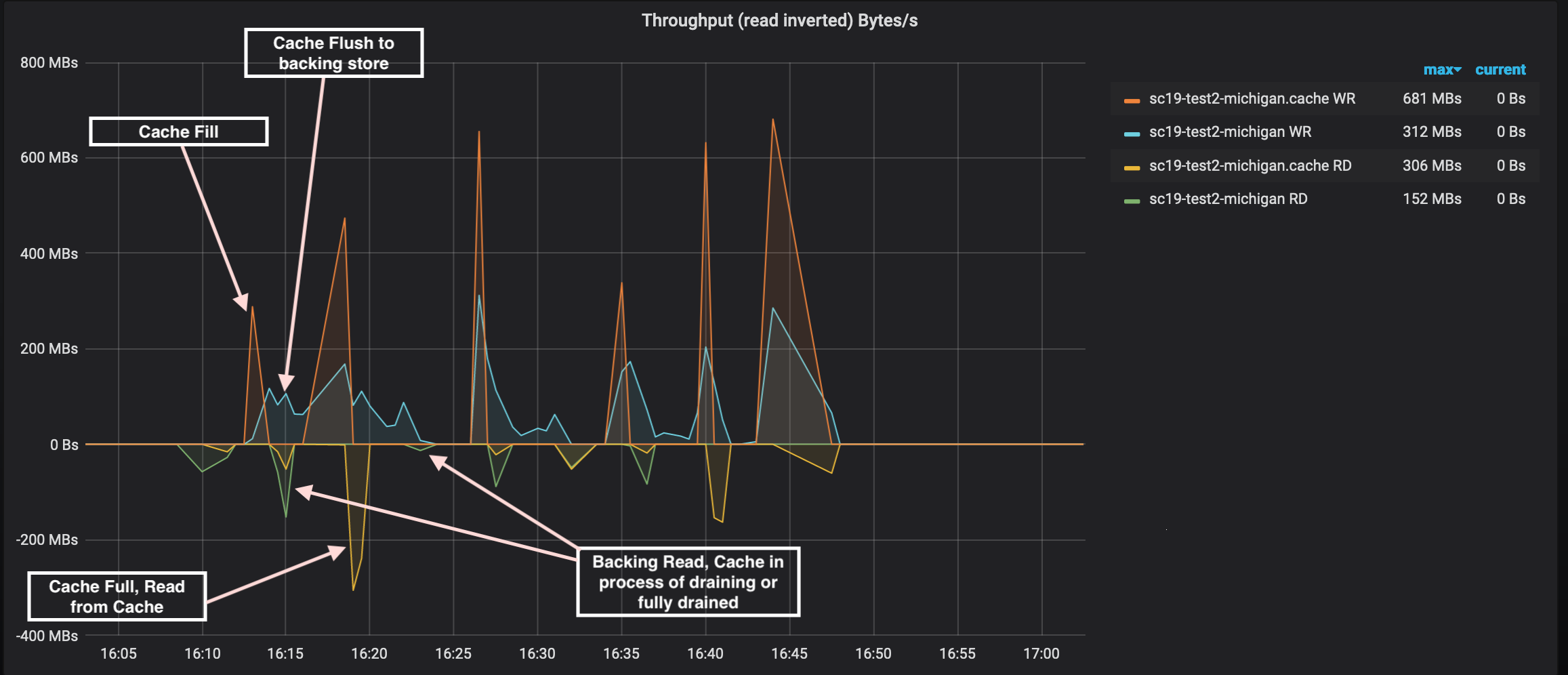

Another view during the test shows data throughput to each pool.

The testing we’re doing here will inform work on a proof-of-concept cache tier module for the Ceph mgr daemon. An early version of the module code is available in this repository. This module should never be used on any production cluster. In fact, at this point it does little more than make a first pass at the basic architecture for creating and tearing down cache tier pools based on geographic location. More information is in the README file.

Open Source Networking: A Learning Experience

Open platform switches running open source software are an exciting development in networking architecture. For Supercomputing and our continued experimentation, we acquired an Edge-Core Wedge 100BF-32X switch. This switch can run a variety of open network operating systems compatible with the Open Network Install Environment (ONIE), as can many other switch hardware platforms. For the switch OS we chose SONiC.

What we learned about open networking will help us use this type of equipment more effectively toward the project’s network management and automation goals. Open networking operating systems are a rapidly evolving area: even in the weeks leading up to Supercomputing, a new SONiC release came out that added CLI capabilities relevant to our configuration. As with many cutting-edge developments, it sometimes takes time to dig into options and configuration that might not be obvious at first glance.

After some initial troubles we were able to use the switch for 100G connectivity to our nodes at the show. A few things we discovered:

- A null packet sent during initial negotiation would disable the 100G fiber link to SCInet. Our solution? Plug in the TX half of the fiber pair but leave RX unplugged for a few seconds so the switch never sees the problematic packet. Then plug in RX and everything is good to go! We have opened an issue for further diagnosis.

- Putting switch ports into a VLAN with the 'untagged' setting caused all ports to go down. We never figured out what we were doing wrong here and ended up using a tagged configuration on both the switch and host ports.

- Buffer sizes need to be configured manually based on connected cable length. SONiC has some design and planning documentation on their wiki here and here as well as instructions on manually setting the configuration.
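For reference, both the tagged VLAN membership we fell back on and the per-port cable lengths can be expressed in SONiC’s config_db. The Python sketch below builds such a fragment. The table and field names (`VLAN`, `VLAN_MEMBER` with `tagging_mode`, and `CABLE_LENGTH` keyed by `AZURE`) reflect our understanding of config_db.json in SONiC releases of that period and should be checked against the release in use; the VLAN ID, port names, and lengths are hypothetical examples.

```python
import json
from typing import Dict, Iterable


def sonic_config_fragment(vlan_id: int, ports: Iterable[str], cable_lengths: Dict[str, str]) -> dict:
    """Build a config_db-style fragment with tagged VLAN members and cable lengths."""
    vlan = f"Vlan{vlan_id}"
    return {
        "VLAN": {vlan: {"vlanid": str(vlan_id)}},
        # Tagged membership: the setup that worked after 'untagged' took ports down.
        "VLAN_MEMBER": {f"{vlan}|{port}": {"tagging_mode": "tagged"} for port in ports},
        # As we understand it, SONiC picks per-port buffer profiles from
        # port speed and the cable length recorded here.
        "CABLE_LENGTH": {"AZURE": dict(cable_lengths)},
    }


if __name__ == "__main__":
    fragment = sonic_config_fragment(
        100, ["Ethernet0", "Ethernet4"], {"Ethernet0": "5m", "Ethernet4": "40m"}
    )
    print(json.dumps(fragment, indent=4, sort_keys=True))
```

Running the script prints the fragment as JSON for review before it is merged into a switch configuration; the host side would need a matching tagged VLAN interface.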

All of this experience was valuable! Supercomputing gives us a chance to try things out in a real-world environment. The pace can be frantic while we try to get everything working, but by the end of the week everything was online and our demo was running.

Campus Support

We couldn’t have done this without support from our university campuses! Our campus networking teams at MSU, WSU, and U-M were responsible for coordinating, configuring, and testing a 100Gbit path from Michigan to the SC19 floor. Thanks are also due to Merit Network, Internet2, Juniper, and Adva for the network paths and equipment enabling our 100Gbit link.

The SC19 show booth is a collaboration between U-M Advanced Research Computing and the MSU Institute for Cyber-Enabled Research to promote research computing at our institutions.
